The Drake equation (also sometimes called the "Green Bank equation", the "Green Bank Formula," or often erroneously labeled the "Sagan equation") is a famous result in the speculative fields of exobiology and the search for extraterrestrial intelligence (SETI).

This equation was devised by Dr. Frank Drake (now Professor Emeritus of Astronomy and Astrophysics at the University of California, Santa Cruz) in 1960, in an attempt to estimate the number of extraterrestrial civilizations in our galaxy with which we might come in contact. The main purpose of the equation is to allow scientists to quantify the uncertainty of the factors which determine the number of such extraterrestrial civilizations.

History

Frank Drake formulated his equation in 1960 in preparation for the Green Bank meeting. This meeting, held at Green Bank, West Virginia, established SETI as a scientific discipline. The historic meeting, whose participants became known as the "Order of the Dolphin," brought together leading astronomers, physicists, biologists, social scientists, and industry leaders to discuss the possibility of detecting intelligent life among the stars.

The Green Bank meeting was also remarkable because it featured the first use of the formula that came to be known as the "Drake equation", which is why the equation is also known by names bearing the "Green Bank" designation. When Drake came up with the formula, he had no notion that it would become a staple of SETI theorists for decades to come. In fact, he thought of it as an organizational tool: a way to order the different issues to be discussed at the Green Bank conference and bring them to bear on the central question of intelligent life in the universe. Carl Sagan, a great proponent of SETI, used and quoted the formula often, and as a result it is often mislabeled as "the Sagan equation". The Green Bank meeting was commemorated by a plaque.

The Drake equation is closely related to the Fermi paradox. Drake suggested that a large number of extraterrestrial civilizations would form, but the lack of evidence of such civilizations (the Fermi paradox) suggests that technological civilizations tend to destroy themselves rather quickly. This theory often stimulates interest in identifying and publicizing ways in which humanity could destroy itself, countered by hopes of avoiding such destruction and eventually becoming a space-faring species. A similar argument is the Great Filter,[1] which notes that since no extraterrestrial civilizations have been observed despite the vast number of stars, some step in the process must be acting as a filter that reduces the final value. According to this view, either it is very hard for intelligent life to arise, or the lifetime of such civilizations must be depressingly short.

The grand question of the number of communicating civilizations in our galaxy could, in Drake's view, be reduced to seven smaller issues with his equation.

The equation

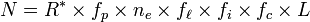

The Drake equation states that:- N is the number of civilizations in our galaxy with which communication might be possible;

- R* is the average rate of star formation in our galaxy

- fp is the fraction of those stars that have planets

- ne is the average number of planets that can potentially support life per star that has planets

- fℓ is the fraction of the above that actually go on to develop life at some point

- fi is the fraction of the above that actually go on to develop intelligent life

- fc is the fraction of civilizations that develop a technology that releases detectable signs of their existence into space

- L is the length of time such civilizations release detectable signals into space.
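Because N is simply the product of the seven factors, the equation translates directly into code. The following is a minimal Python sketch (Python 3.8+ for `math.prod`); the function and parameter names (`drake`, `r_star`, `f_p`, and so on) are illustrative choices, not a standard API.

```python
from math import prod


def drake(r_star: float, f_p: float, n_e: float, f_l: float,
          f_i: float, f_c: float, lifetime: float) -> float:
    """Return N, the expected number of detectable civilizations.

    r_star   -- average star formation rate in the galaxy (stars per year)
    f_p      -- fraction of stars that have planets
    n_e      -- potentially habitable planets per star with planets
    f_l      -- fraction of those planets on which life develops
    f_i      -- fraction of those on which intelligent life develops
    f_c      -- fraction of civilizations releasing detectable signals
    lifetime -- L, years during which such signals are released
    """
    # The four f-terms are fractions, so reject values outside [0, 1]
    for f in (f_p, f_l, f_i, f_c):
        if not 0.0 <= f <= 1.0:
            raise ValueError("f_p, f_l, f_i and f_c must lie in [0, 1]")
    return prod((r_star, f_p, n_e, f_l, f_i, f_c, lifetime))
```

For instance, `drake(10, 0.5, 2, 1, 0.01, 0.01, 10_000)` returns approximately 10 (floating-point rounding may leave a tiny residue). Keeping n_e and L unchecked reflects that they are counts and durations rather than fractions.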

Alternative expression

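The number of stars in the galaxy now, N*, is related to the star formation rate R* by

$$N^{*} = \int_{0}^{T_g} R^{*}(t)\,dt,$$

where T_g is the age of the galaxy. Assuming for simplicity that R* is constant, N* = R* T_g, and the Drake equation can be rewritten in terms of the more easily observed quantity N*:

$$N = N^{*} \cdot f_{p} \cdot n_{e} \cdot f_{\ell} \cdot f_{i} \cdot f_{c} \cdot \frac{L}{T_g}$$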
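As a numerical check of the alternative form, the sketch below assumes a constant star formation rate, so that N* = R* × T_g, where T_g is the age of the galaxy, and confirms that N* × fp × ne × fℓ × fi × fc × (L / T_g) gives the same N as the original product. The galactic age of 10 billion years is an assumed round figure for illustration only, not a value taken from this article.

```python
import math

# Drake's 1961 parameter values, as listed later in this article
R_STAR = 10.0                      # stars formed per year
F_P, N_E, F_L, F_I, F_C = 0.5, 2.0, 1.0, 0.01, 0.01
L = 10_000.0                       # years a civilization remains detectable

# Assumption for illustration only: age of the galaxy in years
T_G = 10e9

# With a constant formation rate, the current star count is R* * T_g
n_star = R_STAR * T_G

n_rate_form = R_STAR * F_P * N_E * F_L * F_I * F_C * L
n_count_form = n_star * F_P * N_E * F_L * F_I * F_C * (L / T_G)

print(n_rate_form, n_count_form, math.isclose(n_rate_form, n_count_form))
```

The two forms diverge only when R* has varied over the galaxy's history, which is the caveat raised in the discussion of the R factor.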

R factor

Although it may seem odd that the number of civilizations should be proportional to the star formation rate, this makes technical sense. (The product of all the terms except L gives how many new communicating civilizations are born each year. Multiplying by the lifetime then gives the expected number in existence. For example, if an average of 0.01 new civilizations are born each year, and each lasts 500 years on average, then on average 5 will exist at any time.) The original Drake equation can be extended to a more realistic model that uses not the number of stars forming now, but the number that were forming several billion years ago. The alternative formulation, in terms of the number of stars in the galaxy, is easier to explain and understand, but implicitly assumes that the star formation rate has been constant over the life of the galaxy.

Expansions

Additional factors that have been described for the Drake equation include:

- nr or reappearance factor: The average number of times a new civilization reappears on the same planet where a previous civilization has appeared and ended

- fm or METI factor: The fraction of communicative civilizations with clear and non-paranoid planetary consciousness (that is, those which actually engage in deliberate interstellar transmission)

Reappearance factor

The equation may further be multiplied by the number of times an intelligent civilization may occur on a planet where it has happened once. Even if an intelligent civilization reaches the end of its lifetime after, for example, 10,000 years, life may still persist on the planet for billions of years, leaving room for another civilization to evolve. Several civilizations may therefore come and go during the lifespan of a single planet. If nr is the average number of times a new civilization reappears on a planet where a previous civilization has appeared and ended, then the total number of civilizations on such a planet is (1 + nr), which is the factor actually added to the equation.

The factor depends on the usual cause of civilization extinction. If extinction is generally caused by temporary uninhabitability, for example a nuclear winter, then nr may be relatively high. If, on the other hand, it is generally caused by permanent uninhabitability, such as stellar evolution, then nr may be almost zero.

In the case of total life extinction, a similar factor may be applicable for fℓ, that is, how many times life may appear on a planet where it has appeared once.
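The reappearance factor enters the equation as a simple multiplier, (1 + nr). A minimal sketch, assuming the standard seven-factor estimate of N has already been computed; the function name `with_reappearance` and the sample values of nr are hypothetical, chosen only to contrast the two extinction scenarios described above.

```python
def with_reappearance(n_base: float, n_r: float) -> float:
    """Scale a Drake estimate by the reappearance factor (1 + n_r).

    n_base -- N from the standard seven-factor equation
    n_r    -- average number of later civilizations per planet after
              the first one ends (0 means no reappearance)
    """
    if n_r < 0:
        raise ValueError("n_r cannot be negative")
    return n_base * (1.0 + n_r)


# Hypothetical values: permanent uninhabitability (n_r = 0) versus
# repeated temporary catastrophes such as a nuclear winter (n_r = 3)
for label, n_r in [("permanent", 0.0), ("temporary", 3.0)]:
    print(label, with_reappearance(10.0, n_r))
```

With a base estimate of 10, the permanent case leaves N unchanged while the temporary case quadruples it to 40.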

METI factor

Alexander Zaitsev argued that being in a communicative phase and emitting dedicated messages are not the same thing. Humanity, for example, although in a communicative phase, is not a communicative civilization: we do not practice the purposeful and regular transmission of interstellar messages. For this reason, he suggested adding a METI factor (Messaging to Extra-Terrestrial Intelligence) to the classical Drake equation.

Historical estimates of the parameters

There is considerable disagreement on the values of most of these parameters, but the values used by Drake and his colleagues in 1961 were:

- R* = 10/year (10 stars formed per year, on average over the life of the galaxy)

- fp = 0.5 (half of all stars formed will have planets)

- ne = 2 (stars with planets will have 2 planets capable of supporting life)

- fl = 1 (100% of these planets will develop life)

- fi = 0.01 (1% of these will develop intelligent life)

- fc = 0.01 (1% of those will be able to communicate)

- L = 10,000 years (such civilizations remain detectable for 10,000 years)
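Multiplying these 1961 values through shows how they lead to N = 10. The sketch separates the birth rate of new communicating civilizations (every factor except L) from the lifetime L, mirroring the explanation in the R factor discussion; the variable names are illustrative.

```python
from math import prod

# Drake and colleagues' 1961 values: (R*, fp, ne, fl, fi, fc)
birth_factors = (10, 0.5, 2, 1, 0.01, 0.01)
lifetime_years = 10_000  # L

# New communicating civilizations appearing per year
births_per_year = prod(birth_factors)
# Expected number detectable at any one time
n = births_per_year * lifetime_years

print(f"{births_per_year:.4f} per year, N = {n:.1f}")
```

In words: about one new communicating civilization every thousand years, each lasting 10,000 years, gives roughly ten at any moment.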

The value of R* is determined from considerable astronomical data, and is the least disputed term of the equation; fp is less certain, but is still much firmer than the values following. Confidence in ne was once higher, but the discovery of numerous gas giants in close orbit with their stars has introduced doubt that life-supporting planets commonly survive the creation of their stellar systems. In addition, most stars in our galaxy are red dwarfs, which flare violently, mostly in X-rays—a property not conducive to life as we know it (simulations also suggest that these bursts erode planetary atmospheres). The possibility of life on moons of gas giants (such as Jupiter's moon Europa, or Saturn's moon Titan) adds further uncertainty to this figure.

Geological evidence from the Earth suggests that fl may be very high: life on Earth appears to have begun around the same time as favorable conditions arose, suggesting that abiogenesis may be relatively common once conditions are right. However, this evidence comes from a single planet, the Earth, and contains anthropic bias, since the planet of study was not chosen at random but by the living organisms that already inhabit it (ourselves). Whether this is actually a case of anthropic bias has been contested; some argue that our asking these questions about life on Earth involves no bias, and that the real limitation is a critically small sample size. Also countering the case for a high fl is that there is no evidence for abiogenesis occurring more than once on the Earth: all terrestrial life stems from a common origin. If abiogenesis were common, one might expect it to have occurred more than once on the Earth. In addition, from a classical hypothesis-testing standpoint, a single example leaves zero degrees of freedom, permitting no valid estimates to be made.

The discovery of life on Mars or on another planet or moon would have a major impact on estimates of fl. If life were found on Mars that had developed independently of life on Earth, it would imply a higher value for fl. While this would raise the degrees of freedom from zero to one, a great deal of uncertainty would remain in any estimate because of the small sample size and the chance that the two origins are not truly independent.

Similar arguments of bias can be made regarding fi and fc by considering the Earth as a model: intelligence capable of extraterrestrial communication has arisen in only one species in the roughly 4-billion-year history of life on Earth. If generalized, this suggests that only relatively old planets may have intelligent life capable of extraterrestrial communication. Again, this model has a large anthropic bias, and there are still zero degrees of freedom. Note that the capacity and willingness to participate in extraterrestrial communication arrived relatively "quickly": the Earth has only an estimated 100,000-year history of intelligent human life, and less than a century of technological ability.

fi, fc and L, like fl, are guesses. Estimates of fi have been affected by the discovery that the Solar System's orbit around the galaxy is roughly circular, at a distance that keeps it out of the spiral arms for hundreds of millions of years (avoiding radiation from novae). Earth's large moon may also aid the evolution of life by stabilizing the planet's axis of rotation. In addition, while life appears to have developed soon after the formation of Earth, the Cambrian explosion, in which a large variety of multicellular life forms came into being, occurred much later, which suggests that special conditions may have been necessary. Some scenarios, such as Snowball Earth, and research into extinction events have raised the possibility that life on Earth is relatively fragile. Again, the controversy over life on Mars is relevant, since a discovery that life formed on Mars but ceased to exist would affect estimates of these terms.

The astronomer Carl Sagan speculated that all of the terms, except for the lifetime of a civilization, are relatively high and the determining factor in whether there are large or small numbers of civilizations in the universe is the civilization lifetime, or in other words, the ability of technological civilizations to avoid self-destruction. In Sagan's case, the Drake equation was a strong motivating factor for his interest in environmental issues and his efforts to warn against the dangers of nuclear warfare.

Plugging apparently "plausible" values into each of the parameters above often yields an expected value of N much greater than 1. This has provided considerable motivation for the SETI movement. However, we have no evidence for extraterrestrial civilizations. This conflict is often called the Fermi paradox, after Enrico Fermi, who first asked about our lack of observations of extraterrestrials; it motivates advocates of SETI to keep expanding the volume of space in which another civilization could be observed.

Other assumptions give values of N that are much less than 1, but some observers believe this is still compatible with observations because of the anthropic principle: no matter how low the probability that any given galaxy will have intelligent life in it, the universe must contain at least one intelligent species, since otherwise the question would not arise.

Some computations of the Drake equation, given different assumptions:

- R* = 10/year, fp = 0.5, ne = 2, fl = 1, fi = 0.01, fc = 0.01, and L = 50,000 years

- N = 10 × 0.5 × 2 × 1 × 0.01 × 0.01 × 50,000 = 50 (so 50 civilizations exist in our galaxy at any given time, on the average)

- R* = 10/year, fp = 0.5, ne = 2, fl = 1, fi = 0.001, fc = 0.01, and L = 500 years

- N = 10 × 0.5 × 2 × 1 × 0.001 × 0.01 × 500 = 0.05 (we are probably alone)

- R* = 20/year, fp = 0.1, ne = 0.5, fl = 1, fi = 0.5, fc = 0.1, and L = 100,000 years

- N = 20 × 0.1 × 0.5 × 1 × 0.5 × 0.1 × 100,000 = 5,000
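The three computations above can be reproduced in a few lines, which makes it easy to try other assumptions. In this sketch the scenario labels ("optimistic", "pessimistic", "alternative") are hypothetical names added for readability; the parameter values are exactly those listed.

```python
from math import prod

# Parameter sets from the list above, in the order (R*, fp, ne, fl, fi, fc, L)
scenarios = {
    "optimistic": (10, 0.5, 2, 1, 0.01, 0.01, 50_000),
    "pessimistic": (10, 0.5, 2, 1, 0.001, 0.01, 500),
    "alternative": (20, 0.1, 0.5, 1, 0.5, 0.1, 100_000),
}

# N is the product of all seven factors in each scenario
results = {name: prod(params) for name, params in scenarios.items()}

for name, n in results.items():
    print(f"{name:>12}: N = {n:,.2f}")
```

Changing fi by a factor of 10 and L by a factor of 100 moves N by three orders of magnitude, which is why the spread of published estimates is so wide.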

Current estimates of the parameters

This section attempts to list the best current estimates for the parameters of the Drake equation.

R* = the rate of star creation in our galaxy

- Estimated by Drake as 10/year. The latest calculations from NASA and the European Space Agency indicate that the current rate of star formation in our galaxy is about 7 per year.

fp = the fraction of those stars that have planets

- Estimated by Drake as 0.5. Modern planet searches show that at least 30% of sun-like stars have planets, and the true proportion may be much higher, since current technology can detect only planets considerably larger than Earth. Infrared surveys of dust discs around young stars imply that 20-60% of sun-like stars may form terrestrial planets.

ne = the average number of planets that can potentially support life per star that has planets

- Estimated by Drake as 2. Marcy et al. note that most of the observed planets have very eccentric orbits, or orbit so close to their star that the temperature is too high for Earth-like life. However, several planetary systems that look more like the Solar System are known, such as HD 70642, HD 154345, and Gliese 849. These may well have smaller, as yet unseen, Earth-sized planets in their habitable zones. Moreover, habitable zones are not limited to Sun-like stars and Earth-sized planets: it is now believed that even tidally locked planets close to red dwarfs might have habitable zones, and some of the large planets detected so far could potentially support life. In early 2008, two different research groups concluded that Gliese 581d may possibly be habitable.[7] [8] Since about 200 planetary systems are known, this implies ne > 0.005.

- Even if planets are in the habitable zone, however, the number of planets with the right proportion of elements may be difficult to estimate. Also, the Rare Earth hypothesis, which posits that conditions for intelligent life are quite rare, has advanced a set of arguments based on the Drake equation that the number of planets or satellites that could support life is small, and quite possibly limited to Earth alone; in this case, the estimate of ne would be infinitesimal.

fℓ = the fraction of the above that actually go on to develop life at some point

- Estimated by Drake as 1.

- In 2002, Charles H. Lineweaver and Tamara M. Davis (at the University of New South Wales and the Australian Centre for Astrobiology) estimated fl as > 0.13 on planets that have existed for at least one billion years using a statistical argument based on the length of time life took to evolve on Earth. Lineweaver has also determined that about 10% of star systems in the Galaxy are hospitable to life, by having heavy elements, being far from supernovae and being stable themselves for sufficient time.

fi = the fraction of the above that actually go on to develop intelligent life

- Estimated by Drake as 0.01.

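fc = the fraction of civilizations that develop a technology that releases detectable signs of their existence into space

- Estimated by Drake as 0.01.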

L = the length of time such civilizations release detectable signals into space

- Estimated by Drake as 10,000 years.

- In an article in Scientific American, Michael Shermer estimated L as 420 years, based on the durations of sixty historical civilizations. Using the twenty-eight civilizations more recent than the Roman Empire, he calculates a figure of 304 years for "modern" civilizations. It could also be argued from Shermer's results that the fall of most of these civilizations was followed by later civilizations that carried on their technologies, so it is doubtful that they count as separate civilizations in the context of the Drake equation. Furthermore, since none of them could communicate over interstellar space, the value of L here could also be argued to be zero.

- The value of L can be estimated from the lifetime of our current civilization, from the advent of radio astronomy in 1938 (dated from Grote Reber's parabolic dish radio telescope) to the present. In 2008, this gives an L of 70 years. However, such an assumption would be erroneous: 70 years is an artificial minimum based on Earth's broadcasting history to date, and using it would make the existence of other civilizations seem unlikely. 10,000 years is still the most popular estimate for L.

One calculation using values closer to current estimates:

- R* = 7/year, fp = 0.5, ne = 2, fl = 0.33, fi = 0.01, fc = 0.01, and L = 10,000 years

- N = 7 × 0.5 × 2 × 0.33 × 0.01 × 0.01 × 10,000 = 2.31
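Because N is directly proportional to L, the choice of lifetime dominates this estimate, which is the point Sagan emphasized. The sketch below holds the other six factors at the values R* = 7/year, fp = 0.5, ne = 2, fl = 0.33, fi = 0.01 and fc = 0.01, and varies L over figures mentioned in this section (70, 304, 420 and 10,000 years). It is an illustration of sensitivity, not a new estimate.

```python
from math import prod

# R*, fp, ne, fl, fi, fc from the calculation above
other_factors = (7, 0.5, 2, 0.33, 0.01, 0.01)
births_per_year = prod(other_factors)  # about 0.000231 per year

# Candidate lifetimes L (years) discussed in this section
for lifetime in (70, 304, 420, 10_000):
    n = births_per_year * lifetime
    print(f"L = {lifetime:>6,} years -> N = {n:.3f}")
```

Only the 10,000-year lifetime pushes N above 1; the radio-era and Shermer-style lifetimes all give N well below 1.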

Criticisms

Since only one planet is known to have life forms of any kind, several terms in the Drake equation are largely based on conjecture. However, based on Earth's experience, some scientists view intelligent life on other planets as possible, and its replication elsewhere as at least plausible. In a 2003 lecture at Caltech, the science fiction author Michael Crichton stated: "Speaking precisely, the Drake equation is literally meaningless, and has nothing to do with science. I take the hard view that science involves the creation of testable hypotheses. The Drake equation cannot be tested and therefore SETI is not science. SETI is unquestionably a religion."

However, actual experiments by SETI scientists do not attempt to address the Drake equation's question of whether extraterrestrial civilizations exist anywhere in the universe; they focus on specific, testable hypotheses (for example, "do extraterrestrial civilizations communicating in the radio spectrum exist near sun-like stars within 50 light years of the Earth?").

Another reply to such criticism is that even though the Drake equation currently involves speculation about unmeasured parameters, it stimulates dialog on these topics. Then the focus becomes how to proceed experimentally.

Try the Drake equation for yourself:

http://www.activemind.com/Mysterious/Topics/SETI/drake_equation.html

Source: Wikipedia
